In the previous post, I listed the common types of load balancing algorithms. This time, I will analyze each type in more detail and address some questions you might encounter on this topic during interviews.

Pros, Cons, and When to Use Each Load Balancing Algorithm

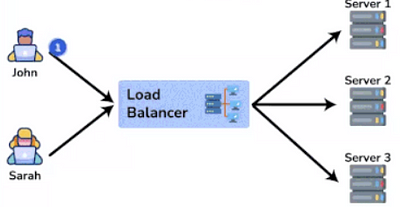

1. Round Robin

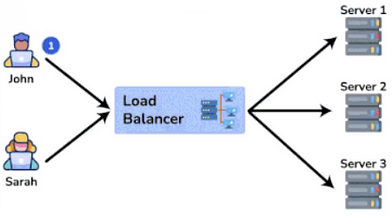

The Round Robin algorithm operates on a cyclic basis, evenly distributing incoming requests across a pool of servers. It sequentially assigns requests starting from the first server and loops back once reaching the last, ensuring a fair distribution of workload.

- Pros: It is simple to implement, distributes requests evenly, and works well with servers of equal capacity. Its behavior is predictable and easy to understand.

- Cons: It doesn’t account for server load or request complexity, which can lead to uneven distribution in practice.

- When to use: Use this algorithm when you have a homogeneous environment of servers with similar hardware running stateless applications, and requests are generally uniform in complexity and resource requirements.
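To make the cyclic assignment concrete, here is a minimal Python sketch. The class name `RoundRobinBalancer` and the server names are hypothetical, and the sketch is single-threaded; a real load balancer would need to advance the pointer atomically when many requests arrive at once.

```python
from typing import List, Sequence


class RoundRobinBalancer:
    """Hands out servers in a fixed cyclic order (illustrative sketch)."""

    def __init__(self, servers: Sequence[str]) -> None:
        if not servers:
            raise ValueError("at least one server is required")
        self.servers: List[str] = list(servers)
        self._next = 0  # index of the server that receives the next request

    def pick(self) -> str:
        server = self.servers[self._next]
        # Move to the next server, wrapping back to index 0 after the last one.
        self._next = (self._next + 1) % len(self.servers)
        return server


lb = RoundRobinBalancer(["s1", "s2", "s3"])
print([lb.pick() for _ in range(5)])  # ['s1', 's2', 's3', 's1', 's2']
```

Note that nothing in `pick` looks at how busy a server is, which is exactly the weakness listed under Cons.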

2. Least Connections

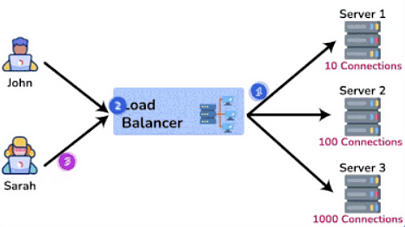

Operating on the principle of minimizing server load, the Least Connections algorithm directs incoming requests to the server with the fewest active connections. This strategy aims to evenly distribute the workload among servers, preventing any single node from becoming overloaded.

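- Pros: Adapts to the current load on each server, since busy servers accumulate open connections and stop receiving new ones. This makes it more flexible than simple Round Robin.

- Cons: It uses the number of active connections as a proxy for load, so it doesn't account for differences in server capacity or for how much work each connection actually requires. The load balancer also has to track connection counts for every server.

- When to use: Use this algorithm when servers have similar capacities but requests or connections vary in duration, for example with long-lived connections.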
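A minimal Python sketch of the idea follows, assuming the load balancer is told when each connection opens (`acquire`) and closes (`release`). The class and method names are hypothetical, chosen only for illustration.

```python
from typing import Dict, Iterable


class LeastConnectionsBalancer:
    """Sends each new connection to the server with the fewest open ones."""

    def __init__(self, servers: Iterable[str]) -> None:
        # Active connection count per server, starting at zero.
        self.active: Dict[str, int] = {server: 0 for server in servers}

    def acquire(self) -> str:
        # min() returns the first server in insertion order when counts tie.
        server = min(self.active, key=lambda s: self.active[s])
        self.active[server] += 1
        return server

    def release(self, server: str) -> None:
        # Call this when a connection to `server` closes.
        self.active[server] -= 1


lb = LeastConnectionsBalancer(["s1", "s2"])
first = lb.acquire()   # 's1' (tie broken by order)
second = lb.acquire()  # 's2'
lb.release(first)      # s1 now has 0 open connections, s2 has 1
print(lb.acquire())    # 's1'
```

The `release` call is what makes this algorithm adaptive: a server stuck on slow requests keeps its count high and is skipped until those connections finish.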

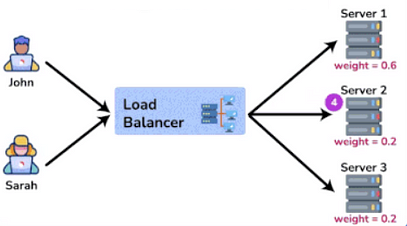

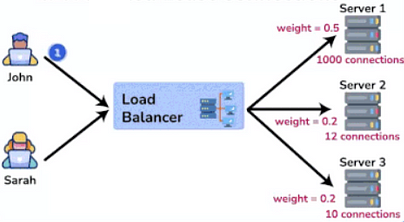

3. Weighted Round Robin

Recognizing the varying capacities of individual servers, the Weighted Round Robin algorithm assigns a weight to each server based on its capabilities. Requests are then distributed in proportion to these weights, so more powerful servers handle a larger share of the workload.

- Pros: It distributes requests based on server capacity: servers with higher weights handle more requests, while less powerful servers receive fewer. It also allows customization, since weights can be adjusted dynamically to reflect server performance, resource availability, or other factors.

- Cons: It requires defining and maintaining a weight for each server, which can be challenging in dynamic environments. Also, like standard Round Robin, WRR doesn't inherently support session persistence, which certain applications may need.

- When to use: Use this algorithm for systems with relatively stable workloads, where weights can be tuned to match server performance.
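There are several ways to implement Weighted Round Robin. The Python sketch below uses the "smooth" variant popularized by nginx, which interleaves picks so the heaviest server doesn't receive its whole share in one burst. A simpler variant just repeats each server `weight` times in a list and cycles through it. The class name and weights here are hypothetical.

```python
from typing import Dict, Mapping


class SmoothWeightedRoundRobin:
    """Weighted Round Robin that interleaves picks instead of sending bursts."""

    def __init__(self, weights: Mapping[str, int]) -> None:
        if not weights or any(w <= 0 for w in weights.values()):
            raise ValueError("weights must be positive")
        self.weights: Dict[str, int] = dict(weights)
        self.current: Dict[str, int] = {server: 0 for server in weights}
        self.total = sum(self.weights.values())

    def pick(self) -> str:
        # 1. Every server's running score grows by its configured weight.
        for server, weight in self.weights.items():
            self.current[server] += weight
        # 2. The server with the highest running score wins this round.
        best = max(self.current, key=lambda s: self.current[s])
        # 3. The winner pays back the total weight, letting the others catch up.
        self.current[best] -= self.total
        return best


lb = SmoothWeightedRoundRobin({"big": 5, "small_a": 1, "small_b": 1})
print([lb.pick() for _ in range(7)])
# ['big', 'big', 'small_a', 'big', 'small_b', 'big', 'big']
```

Over every 7 picks (the sum of the weights), `big` gets 5 requests and each small server gets 1, matching the 5:1:1 ratio. Adjusting weights dynamically would mean updating `weights` and `total` between picks.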

4. Weighted Least Connections

Combining the principles of Least Connections and Weighted Round Robin, this algorithm directs requests to the server with the lowest ratio of active connections to its assigned weight. By considering both server capacity and current load, it optimizes resource utilization across the infrastructure.

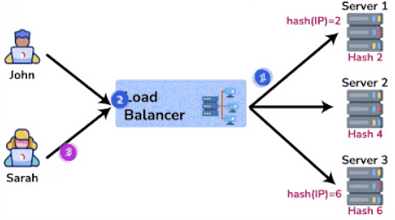
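The ratio rule can be sketched in a few lines of Python, assuming positive integer weights and that the balancer sees connections open and close. Names are hypothetical.

```python
from typing import Dict, Mapping


class WeightedLeastConnections:
    """Picks the server with the lowest active-connections-to-weight ratio."""

    def __init__(self, weights: Mapping[str, int]) -> None:
        if not weights or any(w <= 0 for w in weights.values()):
            raise ValueError("weights must be positive")
        self.weights: Dict[str, int] = dict(weights)
        self.active: Dict[str, int] = {server: 0 for server in weights}

    def acquire(self) -> str:
        # Lowest ratio wins; ties go to the first server in insertion order.
        server = min(self.active, key=lambda s: self.active[s] / self.weights[s])
        self.active[server] += 1
        return server

    def release(self, server: str) -> None:
        # Call this when a connection to `server` closes.
        self.active[server] -= 1


lb = WeightedLeastConnections({"big": 3, "small": 1})
print([lb.acquire() for _ in range(4)])  # ['big', 'small', 'big', 'big']
```

With all four connections still open, `big` holds 3 and `small` holds 1, which is the 3:1 split the weights ask for.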

5. IP Hash

Focusing on session persistence and maintaining client-server affinity, the IP Hash algorithm determines the target server for a request based on the source and/or destination IP address. This approach ensures that requests from the same client are consistently routed to the same server, facilitating stateful communication.

- Pros: Ensures session persistence, which is crucial for stateful applications. Good for scenarios where client IP doesn’t change frequently.

- Cons: Can lead to uneven distribution if many users share the same IP address or range, for example clients behind a corporate NAT or proxy.

- When to use: You need session stickiness and your application isn’t designed to be stateless.
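Here is a minimal Python sketch of IP-based routing, assuming only the source IP is hashed. The function name is hypothetical, and SHA-256 is just one stable hash choice; real load balancers use their own hash functions.

```python
import hashlib
import ipaddress
from typing import Sequence


def pick_by_ip(client_ip: str, servers: Sequence[str]) -> str:
    """Maps a client IP to a server deterministically."""
    if not servers:
        raise ValueError("at least one server is required")
    # Parse the address so equivalent spellings (e.g. IPv6 forms) hash the same.
    packed = ipaddress.ip_address(client_ip).packed
    # Python's built-in hash() is randomized per process for bytes, so use a
    # stable digest that every load balancer instance computes identically.
    digest = hashlib.sha256(packed).digest()
    index = int.from_bytes(digest[:8], "big") % len(servers)
    return servers[index]


servers = ["s1", "s2", "s3"]
print(pick_by_ip("203.0.113.7", servers) == pick_by_ip("203.0.113.7", servers))  # True
```

With plain modulo hashing, adding or removing a server changes `len(servers)` and remaps most clients to a different server, breaking their sessions. Consistent hashing is a common technique for limiting that reshuffling.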

6. Least Response Time

Efficiency and responsiveness are paramount in distributed systems, and the Least Response Time algorithm prioritizes servers with the lowest response time and minimal active connections. By directing requests to the fastest-performing nodes, it enhances user experience and optimizes resource utilization.

- Pros: Takes into account both the number of active connections and the response time, providing a good balance of load and performance.

- Cons: More complex to implement and can be more resource-intensive on the load balancer.

- When to use: You have a diverse set of applications with varying performance characteristics and want to optimize for user experience.
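Products differ in how they define and combine "response time" and "active connections", so the Python sketch below is only one plausible interpretation. The scoring rule (average latency multiplied by open connections plus one), the exponential moving average, and the parameters `initial_ms` and `alpha` are all assumptions made for illustration.

```python
from typing import Dict, Iterable


class LeastResponseTime:
    """Combines open connections with a moving average of response times."""

    def __init__(self, servers: Iterable[str], initial_ms: float = 100.0,
                 alpha: float = 0.3) -> None:
        self.alpha = alpha  # weight given to the newest measurement (assumed)
        # Until real measurements arrive, every server gets the same estimate,
        # so the balancer behaves like Least Connections at first.
        self.avg_ms: Dict[str, float] = {s: initial_ms for s in servers}
        self.active: Dict[str, int] = {s: 0 for s in self.avg_ms}

    def _score(self, server: str) -> float:
        # Assumed scoring rule: average latency scaled by (open connections + 1).
        return self.avg_ms[server] * (self.active[server] + 1)

    def acquire(self) -> str:
        server = min(self.avg_ms, key=self._score)
        self.active[server] += 1
        return server

    def release(self, server: str, elapsed_ms: float) -> None:
        self.active[server] -= 1
        # Exponentially weighted moving average of observed response times.
        self.avg_ms[server] = (self.alpha * elapsed_ms
                               + (1 - self.alpha) * self.avg_ms[server])


lb = LeastResponseTime(["fast", "slow"])
s = lb.acquire()                   # 'fast' (tie broken by order)
lb.release(s, elapsed_ms=20.0)     # fast's average drops to roughly 76 ms
print(lb.acquire(), lb.acquire())  # fast slow
```

The extra bookkeeping on every response (`release` updates a running average) is part of why this method is described above as more resource-intensive on the load balancer.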

7. Random

Simplicity meets unpredictability in the Random algorithm, where incoming requests are randomly assigned to servers within the pool. While lacking the sophistication of other algorithms, Random load balancing can still offer a basic level of distribution in certain scenarios.

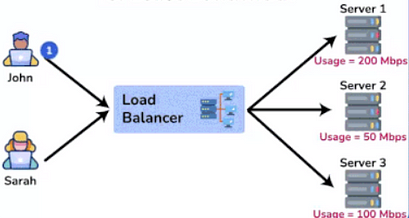
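Random selection is a one-liner in Python. The sketch below accepts an optional seeded `random.Random` so results can be reproduced in tests; the function name is hypothetical.

```python
import random
from typing import Optional, Sequence


def pick_random(servers: Sequence[str], rng: Optional[random.Random] = None) -> str:
    """Chooses a server uniformly at random, ignoring load and capacity."""
    # Passing a seeded random.Random makes the choice reproducible in tests.
    chooser = rng if rng is not None else random
    return chooser.choice(servers)


rng = random.Random(42)
picks = [pick_random(["s1", "s2", "s3"], rng) for _ in range(3000)]
print({s: picks.count(s) for s in ["s1", "s2", "s3"]})  # roughly 1000 each
```

Over many requests the counts even out, but any short window can still be lopsided, which is why Random offers only a basic level of distribution.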

8. Least Bandwidth

In bandwidth-sensitive environments, the Least Bandwidth algorithm dynamically directs requests to the server currently utilizing the least amount of network bandwidth.

By preventing network congestion and ensuring efficient data transmission, it promotes smooth operation and stability.
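How "bandwidth" is measured varies by product. The Python sketch below assumes it means bytes sent per server within a trailing time window (`window_s`, a hypothetical parameter). Because the window is the same for every server, comparing byte totals is equivalent to comparing bytes per second.

```python
import time
from collections import deque
from typing import Deque, Dict, Iterable, Optional, Tuple


class LeastBandwidth:
    """Routes to the server that sent the fewest bytes in a recent time window."""

    def __init__(self, servers: Iterable[str], window_s: float = 10.0) -> None:
        self.window_s = window_s  # length of the measurement window (assumed)
        # Per server: (timestamp, bytes_sent) samples, oldest first.
        self.samples: Dict[str, Deque[Tuple[float, int]]] = {s: deque() for s in servers}

    def record(self, server: str, nbytes: int, now: Optional[float] = None) -> None:
        now = time.monotonic() if now is None else now
        self.samples[server].append((now, nbytes))

    def _recent_bytes(self, server: str, now: float) -> int:
        queue = self.samples[server]
        # Discard samples that have fallen out of the window.
        while queue and queue[0][0] < now - self.window_s:
            queue.popleft()
        return sum(nbytes for _, nbytes in queue)

    def pick(self, now: Optional[float] = None) -> str:
        now = time.monotonic() if now is None else now
        return min(self.samples, key=lambda s: self._recent_bytes(s, now))


lb = LeastBandwidth(["s1", "s2"], window_s=10.0)
lb.record("s1", 5_000, now=100.0)
lb.record("s2", 1_000, now=100.0)
print(lb.pick(now=101.0))  # 's2'
```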

Load Balancing System Design Interview Questions

Now, let’s look at some system design interview questions related to load balancing algorithms.

1. Which load balancing algorithm would you use to handle requests at scale in a large infrastructure?

In large-scale infrastructures, a widely used and efficient choice is Weighted Round Robin.

This algorithm distributes incoming requests across multiple servers in a circular order, but with the added sophistication of assigning different weights to servers based on their capacities.

2. What is the difference between the Least Connection method and Round Robin?

The Least Connection Method and Round Robin are both popular load balancing algorithms, but they operate on different principles.

Round Robin distributes requests sequentially across all servers, assuming equal capacity and load. It’s simple and works well when servers have similar specifications and the requests are fairly uniform.

The Least Connection Method, on the other hand, directs new requests to the server with the fewest active connections. This makes it more adaptive to varying server loads and request complexities.

3. What are sticky sessions in load balancing? Discuss their advantages and potential drawbacks.

Sticky sessions, also known as session affinity, are a load balancing technique in which all requests from a given client are routed to the same server that handled that client's initial request.

The main advantage of sticky sessions is maintaining state for applications that aren’t designed to be stateless, ensuring a consistent user experience for sessions that rely on server-side data.

However, sticky sessions come with drawbacks. They can lead to uneven load distribution if some users have particularly long or resource-intensive sessions.

They also complicate scaling and failover processes, as the failure of a server can disrupt all active sessions assigned to it.
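The failover problem is easy to see in a small Python sketch. Real load balancers often implement affinity with a cookie or the client IP; here a dictionary keyed by a hypothetical session ID stands in for that, and new sessions are assigned round-robin. All names are illustrative.

```python
from itertools import cycle
from typing import Dict, Sequence


class StickySessionBalancer:
    """Pins each session to one server; re-pins only if that server is down."""

    def __init__(self, servers: Sequence[str]) -> None:
        if not servers:
            raise ValueError("at least one server is required")
        self.healthy = set(servers)
        self._count = len(servers)
        self._rotation = cycle(list(servers))  # assigns new sessions round-robin
        self.affinity: Dict[str, str] = {}     # session_id -> server

    def route(self, session_id: str) -> str:
        server = self.affinity.get(session_id)
        if server is None or server not in self.healthy:
            # New session, or its server failed. In the failure case, any state
            # kept in the old server's memory is lost for this client.
            server = self._next_healthy()
            self.affinity[session_id] = server
        return server

    def mark_down(self, server: str) -> None:
        self.healthy.discard(server)

    def _next_healthy(self) -> str:
        for _ in range(self._count):
            candidate = next(self._rotation)
            if candidate in self.healthy:
                return candidate
        raise RuntimeError("no healthy servers")


lb = StickySessionBalancer(["s1", "s2"])
print(lb.route("alice"), lb.route("bob"), lb.route("alice"))  # s1 s2 s1
lb.mark_down("s1")
print(lb.route("alice"))  # s2: alice is moved, and her server-side session is gone
```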

In modern system design, the trend is towards stateless applications and storing session data in distributed caches or databases, which reduces the need for sticky sessions and allows for more flexible, resilient architectures.